The pilot worked. Your prototype agent successfully navigated the CRM and drafted a perfect outreach email. But operationalizing Agentic AI—moving it from a controlled experiment to a live enterprise environment—is where most organizations fail.

This is the most dangerous moment in your AI journey. Companies are investing heavily in operationalizing agentic AI, but many face significant challenges bridging the gap between AI investment and true operational maturity. To succeed, organizations must evolve their strategies, architectures, and operational processes to keep pace with the rapidly advancing capabilities of agentic AI.

In my foundational guide, Agentic AI: The Next Frontier in Autonomous Enterprise Intelligence, I explored how these systems differ from standard chatbots in their ability to act. But as I warned in my recent article on Shadow AI Risks, that ability to act creates a “blast radius” that traditional software does not have.

If you scale without guardrails, you aren’t innovating—you are just industrializing risk. As we look to the future, operationalizing agentic AI will become a defining factor in enterprise operations and service innovation.

Here is my 5-step roadmap for CIOs to navigate “Pilot Purgatory” and deploy agents that are safe, scalable, and sanctioned.

Introduction to Operationalizing Agentic AI

Operationalizing agentic AI marks a pivotal evolution in how organizations harness intelligence and automation to achieve business outcomes. Unlike traditional automation or even generative AI, agentic AI leverages autonomous agents and intelligent software agents that can reason, adapt, and collaborate across complex enterprise environments. These agents are not limited to following static scripts—they perceive their environment, interpret real-time data, and make informed decisions that align with business goals.

For IT leaders and enterprise architects, this shift means moving beyond isolated AI experiments to embedding intelligence directly into the fabric of business operations. By operationalizing agentic AI, organizations can redesign decision-making processes, enhance adaptability, and drive innovation at scale, ultimately unlocking new levels of efficiency and value across the enterprise.

Understanding the Benefits and Challenges

The promise of agentic AI lies in its ability to transform decision-making, streamline operations, and deliver measurable business value. By operationalizing agentic AI, organizations can automate complex tasks, reduce cognitive load for teams, and accelerate progress toward strategic business goals.

Early adopters have reported significant gains in decision automation and efficiency, as intelligent agents act and adapt in real time to changing business needs. However, realizing these benefits is not without challenges. Most organizations encounter hurdles not because of a lack of AI capabilities, but due to the complexity of integrating these new systems with existing data, technology, and human workflows.

Robust governance frameworks (such as the NIST AI Risk Management Framework), disciplined architecture, and ongoing human validation are essential to ensure that agentic AI delivers value safely and reliably. Navigating these challenges requires a thoughtful approach to scale, compliance, and operational clarity, making governance and architecture as critical as the AI technology itself.

The Role of AI Copilots

AI copilots have become a familiar tool for many organizations, offering quick answers, automating repetitive tasks, and boosting team productivity. Yet, despite early wins, most organizations find that the impact of AI copilots plateaus over time. The root cause is often architectural: traditional AI copilots operate outside the core systems they are meant to assist, lacking direct access to application state, user context, and operational constraints. This limits their ability to execute tasks autonomously or adapt to complex scenarios.

Agentic AI introduces a new generation of AI systems that combine autonomous reasoning with direct execution capabilities. By embedding these intelligent agents within enterprise systems, organizations can move beyond the limitations of AI copilots, enabling more robust, context-aware, and integrated AI solutions that deliver sustained business impact.

Creating a Support Fabric

To fully realize the potential of agentic AI at enterprise scale, organizations must design a comprehensive support fabric that embeds intelligence directly into operational workflows. This involves treating context as a foundational architectural element—integrating user state, product context, and session intent into a unified reasoning layer.

By leveraging institutional knowledge and continuously evaluating user needs, agentic AI systems can proactively guide users, often anticipating requirements before they arise. Safe actionability is paramount: interventions are controlled within explicit boundaries, so agents act only when the required conditions are met and the risks are managed.

This approach enables organizations to recognize and address user needs in real time, delivering support and guidance that is both timely and secure, while maintaining control over execution and compliance.

Service Innovation and AI

Service innovation is rapidly evolving as organizations seek to deliver greater value through smarter products, platforms, and support systems. Today’s service delivery extends far beyond traditional help desks, encompassing product interfaces, cloud platforms, security controls, and operational tooling. Real-time, context-driven decisions increasingly rely on advanced AI capabilities.

Agentic AI systems represent a new architectural paradigm, moving from standalone assistants to deeply embedded, agentic systems that operate as integral parts of the platform. These intelligent agents continuously observe application state, user behavior, and operational signals, reasoning over this context to guide, recommend, or act—always within well-defined governance frameworks.

For service innovation leaders, this shift means focusing on system integration, operational clarity, and robust governance as much as on AI sophistication. As agentic AI matures, intelligence will become seamlessly woven into workflows, making support and guidance an invisible, yet powerful, part of the user experience and setting the stage for the next generation of intelligent enterprises.

A 5-Step Roadmap for Operational Success

Operationalizing agentic AI is not a singular event but a phased journey of increasing maturity. To navigate “Pilot Purgatory” successfully, organizations need a structured approach that balances the autonomy of agents with the strict controls of enterprise governance. The following five steps provide a blueprint for moving from experimental prototypes to fully sanctioned, production-ready systems, ensuring that innovation never comes at the expense of security.

Step 1: Operationalizing Agentic AI Requires “Safe Enablement”

Before you expand your pilot, you must redefine what “success” looks like. In the Generative AI era, success was simply “user adoption.” When operationalizing Agentic AI, success is defined by “Safe Enablement.”

You cannot rely on blocking ports or restrictive policies; employees will simply bypass them. The hard part of operationalizing agentic AI is not prohibition but addressing the trust, governance, and reliability challenges that come with automation and autonomous decision-making. So instead of blocking, establish a “Green Light” checklist. An agent should move to production only if it meets specific criteria:

- Identity: Does the agent have a distinct non-human identity in Active Directory?

- Scope: Is the agent’s domain clearly defined (e.g., “Read-only access to Leads,” “No Write access to Invoices”)?

- Reversibility: If the agent executes a bad transaction, can it be rolled back instantly?
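
The checklist above can be expressed as an automated promotion gate. The following is a minimal sketch, not a definitive implementation: `AgentProfile`, its fields, and the scope strings such as `leads:read` are hypothetical names chosen for illustration, and a real gate would query your identity provider and deployment tooling rather than a hand-filled record.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical description of an agent awaiting promotion to production."""
    name: str
    service_identity: Optional[str]   # dedicated non-human identity, if any
    allowed_scopes: FrozenSet[str]    # e.g. {"leads:read"}
    supports_rollback: bool           # can a bad transaction be reversed?


def green_light(agent: AgentProfile) -> List[str]:
    """Return the failed checks; an empty list means the agent may proceed."""
    failures = []
    if not agent.service_identity:
        failures.append("identity: no distinct non-human identity")
    if not agent.allowed_scopes or "*" in agent.allowed_scopes:
        failures.append("scope: domain is undefined or unrestricted")
    if not agent.supports_rollback:
        failures.append("reversibility: actions cannot be rolled back")
    return failures
```

Returning the list of failures, rather than a bare yes or no, gives the owning team a concrete remediation list when an agent is held back.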

Step 2: Architect for the “Kill Switch”

In my “3-Layer Defense Model,” the second layer consists of Operational Guardrails. The most critical of these is the Kill Switch.

Unlike traditional software that waits for a command, autonomous agents operate in continuous loops: perceiving, reasoning, and acting. If an agent enters a hallucination loop in production (a failure mode closely tied to the risk that the OWASP Top 10 for LLM Applications names Excessive Agency), it can spiral out of control in seconds, deleting records or flooding APIs.

The Implementation Requirement: Every agent deployed in production must inherit a hard-coded Kill Switch. Trust frameworks are essential here, as they ensure that kill switches and other guardrails are implemented in a way that aligns with compliance requirements and business objectives. This is not just a “pause” button; it is a disconnect mechanism that severs the agent’s access to network resources immediately upon detecting erratic behavior (like a sudden spike in API calls).
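
To make the idea concrete, here is a minimal in-process sketch of a Kill Switch that trips on a spike in API calls. It assumes a simple sliding-window rate limit as the anomaly signal; the class name, parameters, and thresholds are illustrative, and a production version would revoke credentials or network access at the platform level rather than only raising an error inside the agent process.

```python
import time
from collections import deque


class KillSwitch:
    """Trips when calls inside a sliding window exceed a limit, then stays tripped."""

    def __init__(self, max_calls, window_seconds, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._clock = clock          # injectable so the behavior can be tested
        self._calls = deque()
        self.tripped = False

    def _record_call(self):
        now = self._clock()
        self._calls.append(now)
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] > self.window_seconds:
            self._calls.popleft()
        if len(self._calls) > self.max_calls:
            self.tripped = True      # only a human reset should clear this

    def guard(self, action, *args, **kwargs):
        """Run `action` only while the switch has not tripped."""
        if not self.tripped:
            self._record_call()
        if self.tripped:
            raise RuntimeError("kill switch tripped: agent access revoked")
        return action(*args, **kwargs)
```

Every outbound call the agent makes would go through `guard`. Note that the switch does not reset itself when traffic calms down, which matches the "disconnect, not pause" requirement.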

Step 3: Shift from “Human-in-the-Loop” to “Human-on-the-Loop”

One of the biggest friction points in scaling AI is the bottleneck of human approval. If a human must approve every action, you haven’t really automated anything.

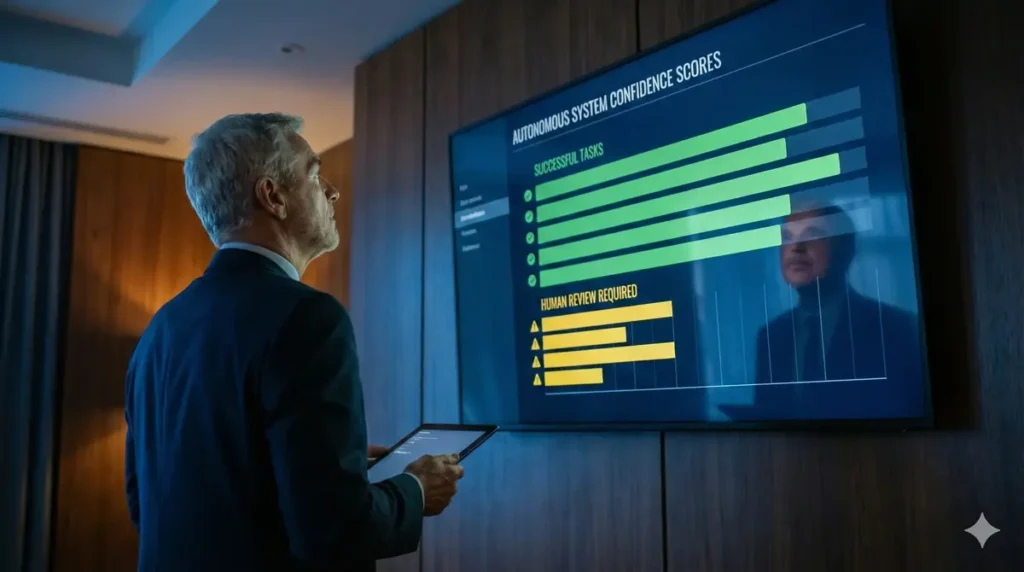

To operationalize effectively, you must transition to a Human-on-the-Loop model. It’s important to treat agents as digital teammates, focusing on their intent, scope, and collaboration capabilities, rather than just as tools, to build trust and enable effective integration within your organization.

- Human-in-the-Loop: The AI waits for permission to act (good for pilots).

- Human-on-the-Loop: The AI acts autonomously within set parameters, while humans monitor the outcomes and intervene only on exceptions.

How to Scale This: Configure your agents with “Confidence Thresholds.”

- High Confidence (95%+): The agent executes the task autonomously.

- Low Confidence (< 95%) or High Value ($10k+): The agent triggers an exception and waits for human review.
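
The threshold rules can be sketched as a small routing function. This is an illustration under stated assumptions: it presumes the agent exposes a confidence score between 0 and 1, and it conservatively treats anything that does not clear the 95% bar as an exception. `ProposedAction` and `route` are hypothetical names.

```python
from dataclasses import dataclass

AUTONOMY_CONFIDENCE = 0.95   # 95%+ runs autonomously
REVIEW_VALUE_USD = 10_000    # $10k+ always goes to a human


@dataclass(frozen=True)
class ProposedAction:
    description: str
    confidence: float   # assumed: agent-reported score in [0, 1]
    value_usd: float


def route(action: ProposedAction) -> str:
    """Return 'execute' or 'human_review' for a proposed action."""
    # High-value actions are reviewed regardless of confidence.
    if action.value_usd >= REVIEW_VALUE_USD:
        return "human_review"
    if action.confidence >= AUTONOMY_CONFIDENCE:
        return "execute"
    return "human_review"
```

Checking value before confidence is a deliberate choice: a highly confident agent should still not move a large sum without a human seeing it.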

Step 4: Enforce RBAC (Role-Based Access Control)

A common mistake in pilots is giving the agent “Admin” access to make testing easier. Moving to production requires a ruthless application of Least Privilege and standard Role-Based Access Control.

Remember, Agentic AI is designed for execution. If an agent has write access to your production database, a bug isn’t just an error log—it’s data loss.

- Segregation of Duties: Assigning different agents with distinct areas of expertise to specific tasks enhances both security and operational efficiency. For example, the agent that reads the customer data should not be the same agent that deletes customer data.

- Service Accounts: Never allow an agent to “borrow” a human employee’s credentials. It needs its own audit trail so you know exactly who (or what) made a decision.
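
A minimal sketch of these two rules follows, assuming an in-memory role table. The account names, role names, and permission strings are hypothetical; in practice the mapping would live in your identity provider and the log in a tamper-resistant audit store.

```python
# Each role carries only the permissions its task needs (least privilege).
ROLE_PERMISSIONS = {
    "customer-reader": frozenset({"customers:read"}),
    "customer-eraser": frozenset({"customers:delete"}),
}

# Each agent has its own service account; none reuses a human's credentials.
SERVICE_ACCOUNTS = {
    "svc-agent-reader": "customer-reader",
    "svc-agent-eraser": "customer-eraser",
}

audit_log = []  # (service_account, permission, allowed) tuples


def authorize(service_account: str, permission: str) -> bool:
    """Check a permission and record the decision, allowed or denied."""
    role = SERVICE_ACCOUNTS.get(service_account)
    allowed = role is not None and permission in ROLE_PERMISSIONS.get(role, frozenset())
    audit_log.append((service_account, permission, allowed))
    return allowed
```

Denied attempts are logged as well as granted ones, since a reader agent repeatedly asking to delete records is itself a signal worth investigating.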

Step 5: The “Shadow” Audit

Before you flip the switch, assume you already have unauthorized agents running. As I noted in Shadow AI Risks, rogue projects often arise because sanctioned tools feel too slow.

Your official rollout is the perfect time to conduct a Shadow AI Audit. This process ensures agentic AI is safely integrated into enterprise services and workflows, embedding AI as a foundational element within business services. By offering a “sanctioned path” to production, you incentivize business units to bring their rogue projects out of the shadows.

- The Carrot: “Migrate your home-grown agents to our secure platform, and we will handle the compute costs and security compliance.”

- The Stick: “Any agent not registered on the platform by Q3 will be blocked by the firewall.”
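
At its simplest, the audit is a comparison between what is actually running and what is registered. The sketch below assumes you can export a list of agent identities observed in gateway or API logs; the identity names and the `find_shadow_agents` helper are hypothetical.

```python
def find_shadow_agents(observed_identities, registered_identities):
    """Return identities seen in traffic that are missing from the registry."""
    return sorted(set(observed_identities) - set(registered_identities))


# Hypothetical inputs: identities seen in logs vs. the sanctioned registry.
observed = ["svc-agent-reader", "sales-gpt-script", "svc-agent-eraser", "sales-gpt-script"]
registered = ["svc-agent-reader", "svc-agent-eraser"]
shadow = find_shadow_agents(observed, registered)
```

The resulting list is the outreach list for the carrot, and later the block list for the stick.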

Conclusion: Governance is Your Accelerator

Many leaders fear that governance slows down innovation. In reality, governance is the only thing that allows you to drive fast.

You wouldn’t drive a Ferrari without brakes. Similarly, you cannot deploy autonomous agents that execute business decisions without the ability to control, audit, and stop them. By following this roadmap, you move from the “Wild West” to a disciplined, high-performance operation. This foundation enables truly AI-powered enterprise operations, where workflows and systems are transformed by autonomous agents delivering real business value.

Stuck in Pilot Purgatory? Don’t wait for a “rogue agent” to crash your production database. At Blue Phakwe Consulting, we help CIOs build the governance frameworks required to scale safely. Book a Fractional CIO Strategy Audit with us today.

Frequently Asked Questions (FAQ)

1. What is the difference between Generative AI and Agentic AI deployment?

Generative AI (like ChatGPT) creates content, so the primary risk is accuracy or copyright. Agentic AI executes actions (like refunds or database updates), so the primary risk is operational damage. Operationalizing Agentic AI requires stricter access controls and automated “kill switches” that Generative AI typically does not need.

2. How long does it take to move an AI agent from pilot to production?

While the coding might only take weeks, the governance setup typically takes 2–3 months. This includes establishing the identity framework, testing the “Human-on-the-Loop” workflows, and passing security audits. Rushing this phase is a common cause of “Pilot Failure.”

3. Do we really need a “Kill Switch” for internal tools?

Yes. Agents operate at machine speed. If a pricing agent hallucinates and discounts your entire inventory by 90%, it can process thousands of orders before a human notices. A Kill Switch allows you to sever the connection instantly based on anomaly detection, preventing financial disaster.

4. How do AI agents differ from traditional automation tools?

AI agents are autonomous software entities capable of making decisions and adapting to changing environments. Unlike traditional automation tools, which follow predefined rules, AI agents can collaborate within multi-agent systems to solve complex data challenges. By embedding intelligence directly into workflows, these agents enable more proactive, resilient, and autonomous operations that go beyond simple automation.

5. What is the role of large language models and language understanding in agentic AI?

Large language models and advanced language understanding are foundational to agentic AI. They enable AI agents to interpret context, reason, and communicate effectively within enterprise environments. This integration unlocks new capabilities, making agents more autonomous, context-aware, and able to handle complex, goal-directed tasks that transform organizational workflows.

6. Can you provide an example of agentic logic in practice?

For example, consider a workflow where an AI agent monitors incoming support tickets. Using agentic logic, the agent can automatically classify tickets, prioritize urgent issues, and trigger follow-up actions—such as updating a database or notifying a human operator. This can be implemented with a code snippet in JavaScript or using orchestration tools like Matillion, demonstrating how agentic logic embeds intelligence into real-world operations.
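
The ticket workflow can be sketched in a few lines. This example is in Python rather than JavaScript, and it uses a naive keyword match as a stand-in for the model call a real agent would make; the keyword list, the `Ticket` type, and the follow-up action names are all assumptions for illustration.

```python
from dataclasses import dataclass

# Naive stand-in for a model-based classifier (substring match only).
URGENT_KEYWORDS = ("outage", "down", "data loss", "security")


@dataclass(frozen=True)
class Ticket:
    ticket_id: str
    text: str


def classify(ticket: Ticket) -> str:
    """Label a ticket 'urgent' or 'routine'."""
    text = ticket.text.lower()
    return "urgent" if any(word in text for word in URGENT_KEYWORDS) else "routine"


def triage(tickets):
    """Return (ticket_id, priority, follow_up) tuples with urgent tickets first."""
    decisions = []
    for ticket in tickets:
        priority = classify(ticket)
        # Urgent issues go to a person; routine ones only update the record.
        follow_up = "notify_human" if priority == "urgent" else "update_database"
        decisions.append((ticket.ticket_id, priority, follow_up))
    # Stable sort: urgent first, original order preserved within each group.
    decisions.sort(key=lambda d: d[1] != "urgent")
    return decisions
```

The agent here only decides which follow-up to trigger. Executing that follow-up would still pass through the access controls and Kill Switch described in the roadmap.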